Key Components of Hadoop MapReduce

To understand how Hadoop MapReduce works, let’s take a closer look at its key components:

Mapper

The Mapper processes one input split (a subset of the input data) and generates intermediate key-value pairs. It calls the user-defined map function once for each input record in its split.
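Here is a minimal sketch of a word-count map function, written in plain Python with no Hadoop dependency. In Hadoop itself, this logic would live in a Java `Mapper` subclass. The function name `word_count_map` and the `(offset, line)` record shape are illustrative assumptions. The key (the record's position in the input) is ignored, and each word in the line becomes an intermediate `(word, 1)` pair.

```python
import re
from typing import Iterator, Tuple

def word_count_map(offset: int, line: str) -> Iterator[Tuple[str, int]]:
    """Map function: called once per input record (one line of text).

    The key (the record's offset) is ignored; every word in the line is
    emitted as an intermediate (word, 1) pair.
    """
    for word in re.findall(r"\w+", line.lower()):
        yield word, 1

# One input record produces several intermediate pairs.
print(list(word_count_map(0, "The cat saw the dog")))
# [('the', 1), ('cat', 1), ('saw', 1), ('the', 1), ('dog', 1)]
```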

Reducer

The Reducer receives the intermediate key-value pairs produced by all the Mappers, grouped by key, and performs the final aggregation or transformation. It calls the user-defined reduce function once for each key, passing the key together with all of its values, and produces the final output.
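Here is a minimal plain-Python sketch of a word-count reduce function. The name `word_count_reduce` is hypothetical, and Hadoop's Java API would use a `Reducer` subclass instead. The function is called once per distinct key with an iterable of all values for that key, and it emits their total.

```python
from typing import Iterable, Iterator, Tuple

def word_count_reduce(word: str, counts: Iterable[int]) -> Iterator[Tuple[str, int]]:
    """Reduce function: called once per distinct key with all of its values."""
    yield word, sum(counts)

print(list(word_count_reduce("the", [1, 1, 1])))  # [('the', 3)]
```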

Combiner

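The Combiner is an optional component that runs on the map nodes and performs a local reduction of each Mapper's intermediate output. This reduces the amount of data transferred across the network during the shuffle phase. Because the framework may run the Combiner zero, one, or several times, the combining operation must not change the final result. Commutative and associative operations such as sums and maximums are typical choices.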
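The following plain-Python sketch shows a Combiner-style local sum over one map task's output. It also checks that combining leaves the final totals unchanged. The helper names `combine` and `final_totals` are hypothetical. Summing is a safe combining operation because it is commutative and associative, so applying it once or several times yields the same totals.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Pair = Tuple[str, int]

def combine(map_output: List[Pair]) -> List[Pair]:
    """Local reduction of one map task's output before it is shuffled."""
    partial: Dict[str, int] = defaultdict(int)
    for word, count in map_output:
        partial[word] += count
    return list(partial.items())

def final_totals(pairs: List[Pair]) -> Dict[str, int]:
    """What the Reducers ultimately compute: a total per key."""
    totals: Dict[str, int] = defaultdict(int)
    for word, count in pairs:
        totals[word] += count
    return dict(totals)

map_output = [("the", 1), ("fox", 1), ("the", 1), ("the", 1)]
combined = combine(map_output)
print(len(map_output), "->", len(combined), "pairs to shuffle")  # 4 -> 2 pairs to shuffle
# Combining must not change the final result.
assert final_totals(combined) == final_totals(map_output)
```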

Partitioner

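The Partitioner decides which Reducer receives each intermediate key-value pair. The default partitioner hashes the key and takes the result modulo the number of Reducers. As a result, every pair with the same key goes to the same Reducer, and distinct keys are spread roughly evenly across Reducers. A few very frequent keys can still overload a single Reducer.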
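Here is a sketch of hash partitioning in plain Python. It follows the formula used by Hadoop's default `HashPartitioner`, `(key.hashCode() & Integer.MAX_VALUE) % numReduceTasks`, and emulates Java's `String.hashCode()` as a stand-in for the key's hash. That stand-in is an assumption: Hadoop's `Text` keys define their own hash, so real partition numbers can differ. Python's built-in `hash()` is avoided because it is randomized per process, and every map task must agree on which Reducer owns a key.

```python
def java_string_hash(s: str) -> int:
    """Emulate Java's String.hashCode() (exact for BMP characters) as a signed 32-bit int."""
    h = 0
    for ch in s:
        h = (31 * h + ord(ch)) & 0xFFFFFFFF  # wrap like Java int arithmetic
    return h - (1 << 32) if h >= (1 << 31) else h

def get_partition(key: str, num_reducers: int) -> int:
    """Clear the sign bit, then take the remainder: always in [0, num_reducers)."""
    return (java_string_hash(key) & 0x7FFFFFFF) % num_reducers

# Every occurrence of a key maps to the same Reducer.
for key in ["apple", "banana", "cherry", "apple"]:
    print(key, "-> reducer", get_partition(key, 3))
```

Clearing the sign bit matters because a Java hash can be negative, and a negative remainder would not be a valid Reducer index.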

InputFormat

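The InputFormat determines how the input data is divided into input splits. It also determines how each split is read as a sequence of records (for example, one record per line of a text file) for processing by the Mappers. It defines the logical view of the input data.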
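Here is a simplified plain-Python sketch of what a line-oriented InputFormat does. It computes fixed-size splits over a file and then reads each split as `(byte offset, line)` records. The function names and the tiny split size are assumptions for illustration. The ownership rule mirrors how Hadoop's line reader avoids reading a boundary-crossing line twice. However, the real reader streams its split rather than scanning the whole file as this sketch does.

```python
from typing import Iterator, List, Tuple

def compute_splits(total_size: int, split_size: int) -> List[Tuple[int, int]]:
    """Divide a file of total_size bytes into (start, length) splits."""
    return [(start, min(split_size, total_size - start))
            for start in range(0, total_size, split_size)]

def read_records(data: bytes, start: int, length: int) -> Iterator[Tuple[int, str]]:
    """Yield (byte offset, line) records owned by one split.

    A split owns the lines that start in (start, end]; the first split also
    owns the line at offset 0. Each line is therefore read exactly once, even
    when it crosses a split boundary.
    """
    end = start + length
    line_start = 0
    while line_start < len(data):
        newline = data.find(b"\n", line_start)
        line_end = len(data) if newline == -1 else newline
        owned = (line_start <= end) if start == 0 else (start < line_start <= end)
        if owned:
            yield line_start, data[line_start:line_end].decode("utf-8")
        line_start = line_end + 1

data = b"alpha\nbeta\ngamma\ndelta\n"
for split in compute_splits(len(data), 8):
    print(split, list(read_records(data, *split)))
```

In this example, the line `beta` starts in the first split but ends past its boundary, so the first split's reader finishes it and the second split does not read it again.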

OutputFormat

The OutputFormat specifies how the final output is written or stored after the Reduce phase. It defines the format and location of the output data.
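Here is a plain-Python sketch of a text-style OutputFormat, in which each Reducer writes its pairs as `key<TAB>value` lines to its own file in the output directory. The function name `write_text_output` is hypothetical. The tab separator and the `part-r-NNNNN` file name follow the conventions of Hadoop's default `TextOutputFormat` for reduce output.

```python
import os
import tempfile
from typing import Iterable, Tuple

def write_text_output(output_dir: str, reducer_id: int,
                      records: Iterable[Tuple[object, object]],
                      separator: str = "\t") -> str:
    """Write one Reducer's output as 'key<separator>value' lines; return the file path."""
    os.makedirs(output_dir, exist_ok=True)
    # One file per Reducer, named like Hadoop's reduce output files.
    path = os.path.join(output_dir, f"part-r-{reducer_id:05d}")
    with open(path, "w", encoding="utf-8") as f:
        for key, value in records:
            f.write(f"{key}{separator}{value}\n")
    return path

with tempfile.TemporaryDirectory() as out_dir:
    path = write_text_output(out_dir, 0, [("fox", 1), ("the", 3)])
    print(os.path.basename(path))  # part-r-00000
    with open(path, encoding="utf-8") as f:
        print(f.read(), end="")
```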

MapReduce Workflow

The MapReduce workflow consists of several phases that collectively process and transform the input data. Let’s understand each phase in detail:

Input and Output Phases

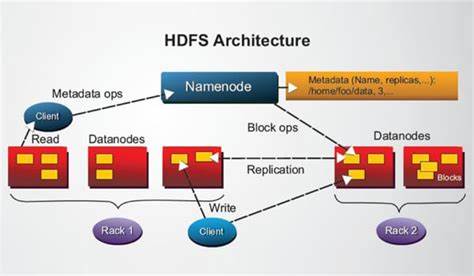

In the input phase, the input data is read from the Hadoop Distributed File System (HDFS) or another storage system. The InputFormat divides the input into input splits. The framework then launches one Map task per split, preferring nodes that store that split's data locally.

In the output phase, the Reducers' output is written through the OutputFormat to the specified location, typically an HDFS directory that contains one output file per Reducer.

Map Phase

During the Map phase, the input data is processed in parallel by multiple Mappers. Each Mapper applies the map function to its assigned portion of the input data and produces intermediate key-value pairs.
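Here is a sketch of the Map phase in plain Python. Threads from `concurrent.futures` stand in for map tasks running on separate machines. This is an assumption for illustration, because Hadoop runs map tasks as separate processes, usually on different nodes. Each split is processed independently of the others, which is what makes this phase easy to parallelize. The names `map_task` and `run_map_phase` are hypothetical.

```python
import re
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple

Pair = Tuple[str, int]

def map_task(split: List[str]) -> List[Pair]:
    """One map task: apply a word-count map function to every record in its split."""
    output: List[Pair] = []
    for line in split:
        output.extend((word, 1) for word in re.findall(r"\w+", line.lower()))
    return output

def run_map_phase(splits: List[List[str]], workers: int = 4) -> List[List[Pair]]:
    """Run one map task per split concurrently; results come back in split order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(map_task, splits))

splits = [["the cat"], ["the dog", "a cat"]]
print(run_map_phase(splits))
```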

Reduce Phase

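Between the two phases, the framework shuffles and sorts the intermediate data. Each Reducer fetches its partition of the key-value pairs from every Mapper, and the pairs are merged and sorted by key so that all values for the same key are grouped together. In the Reduce phase, each Reducer then applies the reduce function to every key and its list of values to produce the final output.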
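Here is a single-Reducer sketch of the shuffle-and-sort step followed by the Reduce phase, written in plain Python. Sorting the merged map outputs by key lets `itertools.groupby` collect each key's values in a single pass. This mirrors why Hadoop sorts intermediate data before reducing. The function names are hypothetical, and partitioning across multiple Reducers is left out for brevity.

```python
from itertools import groupby
from operator import itemgetter
from typing import Callable, Iterable, Iterator, List, Tuple

Pair = Tuple[str, int]
ReduceFn = Callable[[str, Iterable[int]], Iterator[Pair]]

def shuffle_and_reduce(map_outputs: List[List[Pair]], reduce_fn: ReduceFn) -> List[Pair]:
    """Merge every map task's output, sort by key, then reduce each key's group."""
    merged = sorted((pair for output in map_outputs for pair in output), key=itemgetter(0))
    results: List[Pair] = []
    # groupby only groups adjacent items, which is why the sort comes first.
    for key, group in groupby(merged, key=itemgetter(0)):
        results.extend(reduce_fn(key, (value for _, value in group)))
    return results

def sum_reduce(key: str, values: Iterable[int]) -> Iterator[Pair]:
    yield key, sum(values)

map_outputs = [[("the", 1), ("cat", 1)], [("the", 1), ("dog", 1), ("cat", 1)]]
print(shuffle_and_reduce(map_outputs, sum_reduce))
# [('cat', 2), ('dog', 1), ('the', 2)]
```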

Benefits of Using Hadoop MapReduce

Hadoop MapReduce offers several advantages for big data processing:

Scalability and Performance

MapReduce processes large datasets by distributing the workload across the machines in a cluster. It scales horizontally: adding machines to the cluster generally increases the volume of data it can process in a given time.

Fault Tolerance

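Hadoop MapReduce provides built-in fault tolerance. If a task or node fails during processing, the framework automatically reschedules the affected tasks on other available nodes. Because HDFS stores replicated copies of the input data, the re-executed tasks can read their input from another replica. The job can therefore still complete without data loss.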
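Here is a toy plain-Python sketch of task re-execution. A failed task attempt is retried on another node until it succeeds or an attempt limit is reached. The node names, `run_with_retries`, `flaky_map_task`, and `TaskFailedError` are hypothetical. Hadoop bounds retries per task with settings such as `mapreduce.map.maxattempts`, whose default is 4, and the sketch uses the same default.

```python
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")

class TaskFailedError(Exception):
    """Raised when every attempt of a task has failed."""

def run_with_retries(task: Callable[[str], T], nodes: Sequence[str],
                     max_attempts: int = 4) -> T:
    """Run task on a node; after each failure, retry on the next node in the list."""
    errors: List[str] = []
    for attempt in range(max_attempts):
        node = nodes[attempt % len(nodes)]
        try:
            return task(node)
        except Exception as exc:  # treat any exception as a failed task attempt
            errors.append(f"attempt {attempt + 1} on {node}: {exc}")
    raise TaskFailedError("; ".join(errors))

def flaky_map_task(node: str) -> str:
    """Hypothetical task that fails whenever it is scheduled on node-1."""
    if node == "node-1":
        raise RuntimeError("node lost")
    return f"output from {node}"

print(run_with_retries(flaky_map_task, ["node-1", "node-2", "node-3"]))  # output from node-2
```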

Data Processing Flexibility

MapReduce supports a wide range of data processing tasks, including filtering, aggregation, sorting, and more. It can handle both structured and unstructured data, making it versatile for various use cases.

Real-World Applications of Hadoop MapReduce

Hadoop MapReduce finds application in various domains. Some popular use cases include:

Log Analysis

MapReduce can efficiently process large log files generated by systems, applications, or websites. It enables organizations to extract valuable insights from log data, identify patterns, and troubleshoot issues.
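As an illustration, here is a plain-Python sketch of a log-analysis job that counts HTTP status codes. It assumes access-log lines in the Common Log Format. The regular expression, function names, and sample lines are hypothetical. The map function skips malformed lines rather than failing the task, which is a common choice for messy log data.

```python
import re
from typing import Dict, Iterable, Iterator, List, Tuple

# Assumed Common Log Format, e.g.
# 127.0.0.1 - - [10/Oct/2023:13:55:36 +0000] "GET /index.html HTTP/1.1" 200 2326
STATUS_PATTERN = re.compile(r'"[^"]*" (\d{3}) ')

def status_map(offset: int, line: str) -> Iterator[Tuple[str, int]]:
    """Emit (status code, 1) for each well-formed log line; skip the rest."""
    match = STATUS_PATTERN.search(line)
    if match:
        yield match.group(1), 1

def status_reduce(status: str, counts: Iterable[int]) -> Iterator[Tuple[str, int]]:
    yield status, sum(counts)

def count_statuses(lines: List[str]) -> Dict[str, int]:
    """Local stand-in for the job: map every line, group by key, reduce each group."""
    grouped: Dict[str, List[int]] = {}
    for offset, line in enumerate(lines):  # line index stands in for the byte offset
        for status, one in status_map(offset, line):
            grouped.setdefault(status, []).append(one)
    return dict(pair for status in sorted(grouped)
                for pair in status_reduce(status, grouped[status]))

logs = [
    '10.0.0.1 - - [10/Oct/2023:13:55:36 +0000] "GET / HTTP/1.1" 200 512',
    '10.0.0.2 - - [10/Oct/2023:13:55:37 +0000] "GET /missing HTTP/1.1" 404 0',
    'not a log line',
    '10.0.0.1 - - [10/Oct/2023:13:55:38 +0000] "POST /api HTTP/1.1" 200 64',
]
print(count_statuses(logs))  # {'200': 2, '404': 1}
```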

Recommendation Systems

E-commerce platforms and content streaming services have used MapReduce to build recommendation systems. These jobs analyze user behavior, preferences, and historical data to provide personalized recommendations to users, enhancing the overall user experience.

Fraud Detection

MapReduce plays a vital role in fraud detection and prevention. By analyzing large volumes of transactional data, it can identify patterns and anomalies that indicate fraudulent activities. This helps financial institutions and e-commerce platforms mitigate risks and protect their customers.

Challenges and Limitations of Hadoop MapReduce

While Hadoop MapReduce offers numerous benefits, it also has some challenges and limitations:

Complex Programming Model

Developing MapReduce applications requires understanding the programming model and writing custom code. This can be challenging for developers who are not familiar with distributed computing concepts.

High Latency for Small Jobs

MapReduce is designed for processing large-scale data, and it may not be suitable for small, real-time tasks. The overhead involved in setting up and managing MapReduce jobs can result in high latency for small job sizes.

Lack of Real-Time Processing

MapReduce operates in batch processing mode, which means it is not ideal for real-time data processing scenarios. Applications requiring instant insights or low-latency processing may need to explore alternative technologies.

Conclusion

Hadoop MapReduce is a powerful framework for processing and analyzing big data. Its ability to distribute data processing tasks across a cluster of machines enables efficient handling of large datasets. While it offers scalability, fault tolerance, and flexibility, it also has challenges in terms of complexity and real-time processing. Understanding the fundamentals of Hadoop MapReduce is crucial for organizations seeking to leverage big data for valuable insights and decision-making.