In today’s data-driven world, managing and processing large volumes of data has become a critical challenge for organizations across many industries. To tackle this challenge, technologies such as Apache Hadoop and its MapReduce framework have emerged as powerful tools. In this article, we will explore Hadoop MapReduce: its significance, key components, workflow, benefits, real-world applications, and limitations.

Introduction to Hadoop MapReduce

Hadoop MapReduce is a software framework for writing applications that process and analyze enormous volumes of data in parallel across a distributed cluster of computers. It is an integral part of the Apache Hadoop ecosystem, which provides a scalable, fault-tolerant platform for big data processing.

Understanding the Basics of Hadoop

What is Hadoop?

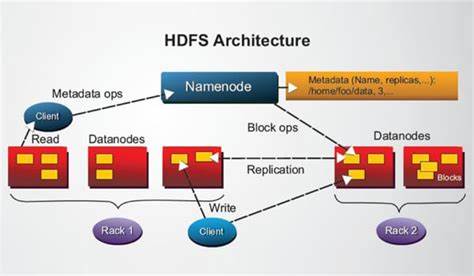

Hadoop is an open-source framework for storing and processing large datasets across clusters of inexpensive, commodity hardware. Its core components include the Hadoop Distributed File System (HDFS), which stores the data, and the Hadoop MapReduce framework, which processes that data in parallel.
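To make the storage side concrete, here is a small plain-Java sketch of how a file is cut into fixed-size blocks before HDFS spreads and replicates them across machines. It is an illustration, not the HDFS API: the class and method names are hypothetical, and it assumes the common defaults of a 128 MB block size and a replication factor of 3, both of which are configurable per cluster.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of HDFS-style block splitting; this is not the HDFS API. */
public class BlockSplitSketch {
    // Assumed defaults: 128 MB blocks, 3 replicas (both configurable in a real cluster).
    static final long BLOCK_SIZE = 128L * 1024 * 1024;
    static final int REPLICATION = 3;

    /** Returns the length in bytes of each block; only the last block may be shorter. */
    static List<Long> blockLengths(long fileSize) {
        List<Long> blocks = new ArrayList<>();
        for (long offset = 0; offset < fileSize; offset += BLOCK_SIZE) {
            blocks.add(Math.min(BLOCK_SIZE, fileSize - offset));
        }
        return blocks;
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024;
        long fileSize = 300 * mb; // a hypothetical 300 MB file
        List<Long> blocks = blockLengths(fileSize);
        for (long len : blocks) {
            System.out.println("block of " + len / mb + " MB"); // 128, 128, then 44
        }
        // Every block is stored REPLICATION times, so raw disk usage is a multiple of the file size.
        System.out.println("raw storage with replication: " + fileSize * REPLICATION / mb + " MB");
    }
}
```

Replication is why raw disk usage exceeds the logical file size: each block lives on several nodes, so the data survives the failure of individual machines.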

Why is Hadoop important?

Hadoop has gained immense popularity because it helps organizations handle the three V’s of big data: volume, velocity, and variety. It allows organizations to store, process, and analyze massive amounts of structured and unstructured data efficiently. With Hadoop, businesses can gain valuable insights, make data-driven decisions, and uncover hidden patterns and correlations.

The MapReduce Paradigm

What is MapReduce?

MapReduce is a programming model, with an associated implementation, that breaks a large data processing job into smaller tasks that can be executed in parallel. It consists of two main phases: the Map phase and the Reduce phase.
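The contract between the two phases can be summarized as a pair of type signatures. This is a conventional way of describing the model rather than any specific API, although Hadoop's `Mapper` and `Reducer` classes are likewise parameterized by their input and output key and value types:

$$
\begin{aligned}
\text{map} &: (k_1, v_1) \rightarrow \text{list}(k_2, v_2) \\
\text{reduce} &: \bigl(k_2, \text{list}(v_2)\bigr) \rightarrow \text{list}(k_3, v_3)
\end{aligned}
$$

For word counting, for example, map turns a line of text into pairs such as $(\text{the}, 1)$, and reduce turns $(\text{the}, [1, 1, 1])$ into $(\text{the}, 3)$.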

How does MapReduce work?

During the Map phase, the input data is divided into chunks (called input splits), and multiple map tasks process these chunks in parallel. Each map task applies a user-defined map function to its input and produces intermediate key-value pairs. The framework then sorts and groups these intermediate pairs by key, a step known as the shuffle, and passes them to the Reduce phase.
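The Map phase and the grouping step can be sketched in plain Java, using word counting as the running example. This is a single-machine simulation, not Hadoop's API: the class and method names are hypothetical, each string in the input list stands in for an input chunk, a parallel stream stands in for parallel map tasks, and `Collectors.groupingBy` stands in for the framework's grouping of intermediate pairs by key.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

/** Hypothetical single-machine simulation of the Map phase plus grouping by key. */
public class MapPhaseSketch {

    // User-defined map function: one chunk of text -> intermediate (word, 1) pairs.
    static List<Map.Entry<String, Integer>> map(String chunk) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String word : chunk.toLowerCase().split("\\W+")) {
            if (!word.isEmpty()) { // skip the empty token produced by leading punctuation
                out.add(Map.entry(word, 1));
            }
        }
        return out;
    }

    // Run the map function over every chunk in parallel, then group intermediate values by key.
    static Map<String, List<Integer>> mapAndGroup(List<String> chunks) {
        return chunks.parallelStream()
                .flatMap(chunk -> map(chunk).stream())
                .collect(Collectors.groupingBy(
                        Map.Entry::getKey,
                        TreeMap::new, // sorted keys, loosely mirroring Hadoop's sort before reduce
                        Collectors.mapping(Map.Entry::getValue, Collectors.toList())));
    }

    public static void main(String[] args) {
        List<String> chunks = List.of("the quick fox", "the lazy dog", "the fox");
        System.out.println(mapAndGroup(chunks));
        // {dog=[1], fox=[1, 1], lazy=[1], quick=[1], the=[1, 1, 1]}
    }
}
```

A `TreeMap` is used so the keys come out sorted, which loosely reflects the fact that Hadoop sorts intermediate keys before handing them to reduce tasks.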

In the Reduce phase, reduce tasks process the grouped intermediate data: each call to the user-defined reduce function receives one key together with all of the values associated with it. The reduce function combines, summarizes, or transforms those values to produce the final output, which is typically written back to HDFS or sent to an external system.
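The Reduce phase for the same word-count example can be sketched as follows. Again, this is a hypothetical plain-Java illustration rather than Hadoop's `Reducer` class: it assumes the input has already been grouped by key, as the Map phase and grouping step would deliver it, and it returns an in-memory map instead of writing the output to storage.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical plain-Java sketch of the Reduce phase; not Hadoop's Reducer API. */
public class ReducePhaseSketch {

    // User-defined reduce function: one key plus all of its values -> one summarized value.
    // The key is unused for word count but kept to mirror the (key, list of values) contract.
    static int reduce(String key, List<Integer> values) {
        int sum = 0;
        for (int v : values) {
            sum += v;
        }
        return sum;
    }

    // Apply reduce to every key group; the returned map stands in for the final output.
    static Map<String, Integer> reduceAll(Map<String, List<Integer>> grouped) {
        Map<String, Integer> output = new TreeMap<>();
        grouped.forEach((key, values) -> output.put(key, reduce(key, values)));
        return output;
    }

    public static void main(String[] args) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        grouped.put("fox", List.of(1, 1));
        grouped.put("the", List.of(1, 1, 1));
        grouped.put("dog", List.of(1));
        System.out.println(reduceAll(grouped)); // {dog=1, fox=2, the=3}
    }
}
```

Because each key's values are reduced independently, different keys can be handled by different reduce tasks in parallel, which is what lets the Reduce phase scale out like the Map phase.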