Hadoop is an open-source framework designed to process and store large datasets across distributed computing clusters. It provides a scalable, reliable, and cost-effective solution for handling big data. In this article, we will explore the fundamentals of Hadoop, its components, and its applications.

Understanding the Basics of Hadoop

2.1 What is Hadoop?

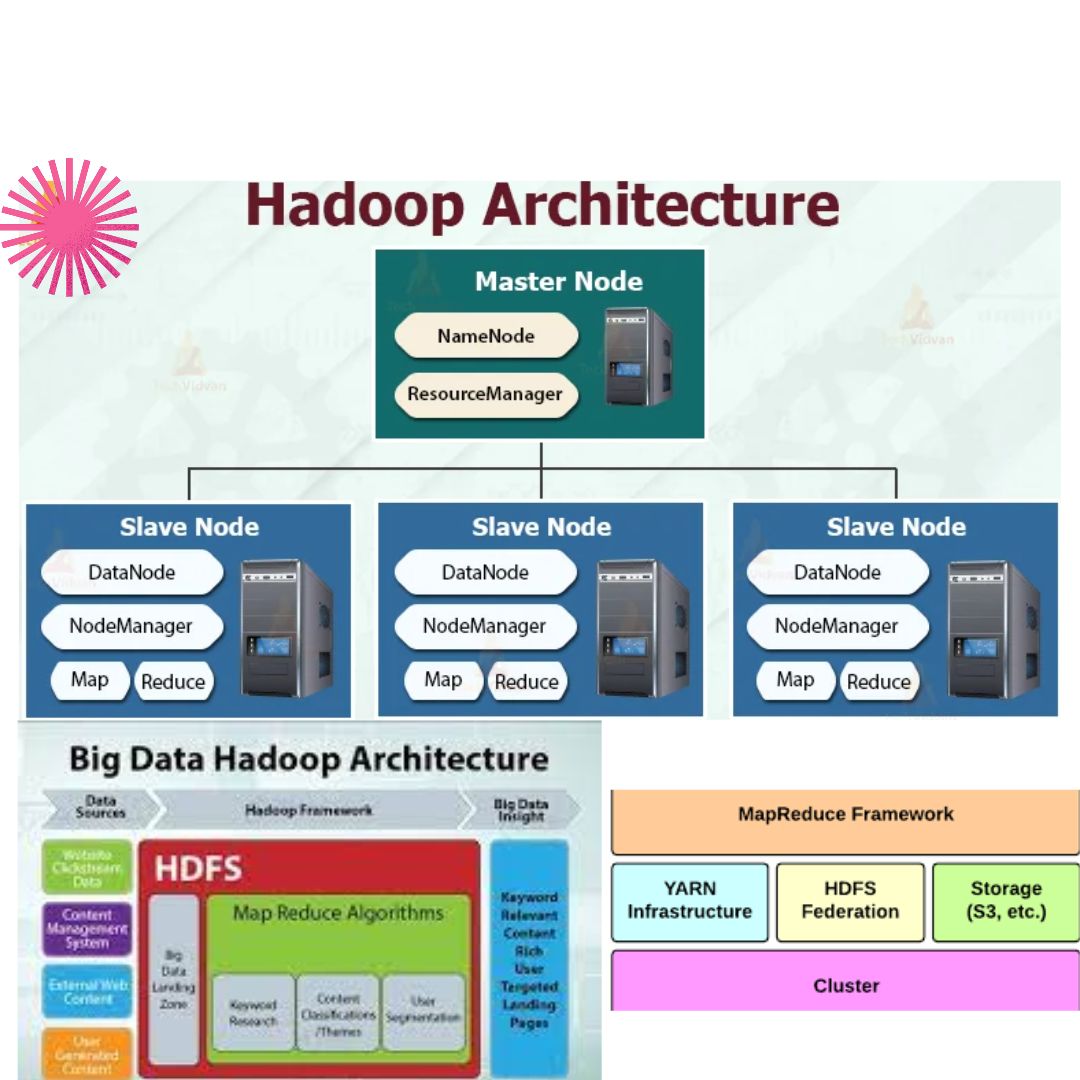

Hadoop is a software framework that processes large datasets in a distributed fashion across clusters of computers. It has two key parts: the Hadoop Distributed File System (HDFS) for storing data, and the MapReduce programming model for processing it.

2.2 The Components of Hadoop

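Hadoop itself consists of four core modules (HDFS, MapReduce, YARN, and Hadoop Common). Related Apache projects such as Hive, Pig, and Spark are commonly run alongside them. Together they provide a robust and scalable platform for big data processing. The key pieces include: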

- Hadoop Distributed File System (HDFS): A distributed file system that stores data across multiple machines in a Hadoop cluster.

- MapReduce: A programming model for processing and analyzing large datasets in parallel across a cluster of computers.

- YARN (Yet Another Resource Negotiator): A resource management framework that manages resources in a Hadoop cluster and schedules tasks.

- Hadoop Common: A collection of shared utilities and libraries used by the other Hadoop modules.

- Apache Hive: A data warehouse infrastructure built on top of Hadoop for querying and analyzing large datasets.

- Apache Pig: A high-level scripting platform whose scripts, written in Pig Latin, are compiled into MapReduce jobs that run on Hadoop.

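- Apache Spark: A general-purpose cluster computing engine that processes large datasets quickly, largely by keeping working data in memory. It is a separate Apache project that can run on YARN and read data from HDFS.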

Getting Started with Hadoop

3.1 Setting Up a Hadoop Cluster

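To get started with Hadoop, you need to set up a Hadoop cluster. A cluster consists of multiple machines: one acts as the master and the others act as workers. For storage, the master runs the NameNode, which manages the file system namespace and metadata, while each worker runs a DataNode, which stores the actual data blocks. For processing, YARN's ResourceManager on the master schedules tasks, and a NodeManager on each worker runs them.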

3.2 Hadoop Distributed File System (HDFS)

HDFS is the primary storage system used by Hadoop. It is designed to store very large files and to tolerate machine failures. HDFS divides each file into fixed-size blocks (128 MB by default in Hadoop 2 and later) and replicates each block on different DataNodes (three copies by default), so the data remains available when a single machine fails.
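
To make the block-and-replica idea concrete, here is a minimal Python sketch. It does not use any Hadoop API. The function names and the round-robin placement are assumptions made for illustration; real HDFS placement is rack-aware. The 128 MiB block size and replication factor of 3 mirror the usual defaults and are configurable in practice.

```python
MIB = 1024 * 1024
BLOCK_SIZE = 128 * MIB  # assumed default block size
REPLICATION = 3         # assumed default replication factor


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Return the size in bytes of each block a file of file_size bytes occupies."""
    if file_size <= 0:
        return []
    full_blocks, remainder = divmod(file_size, block_size)
    sizes = [block_size] * full_blocks
    if remainder:
        sizes.append(remainder)  # the last block only holds the leftover bytes
    return sizes


def place_replicas(num_blocks, datanodes, replication=REPLICATION):
    """Assign each block to `replication` distinct DataNodes, round-robin.

    Simplification: this only shows that every block lives on several
    different machines; it ignores racks, free space, and load.
    """
    if replication > len(datanodes):
        raise ValueError("need at least as many DataNodes as replicas")
    return {
        block_id: [datanodes[(block_id + r) % len(datanodes)] for r in range(replication)]
        for block_id in range(num_blocks)
    }


def blocks_lost(placement, failed_node):
    """Return ids of blocks with no surviving replica after one node fails."""
    return [b for b, nodes in placement.items() if all(n == failed_node for n in nodes)]


sizes = split_into_blocks(300 * MIB)           # a hypothetical 300 MiB file
print([s // MIB for s in sizes])               # [128, 128, 44]
placement = place_replicas(len(sizes), ["dn1", "dn2", "dn3", "dn4"])
print(placement[0])                            # ['dn1', 'dn2', 'dn3']
print(blocks_lost(placement, "dn2"))           # []
```

Because every block is stored on three different machines in this sketch, losing any single DataNode leaves at least two copies of each block.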

Hadoop MapReduce

4.1 MapReduce Concept

MapReduce is a programming model used in Hadoop for processing and analyzing large datasets in parallel. It has two key phases: map and reduce. In the map phase, the input is divided into smaller chunks that are processed independently, each producing intermediate key-value pairs. In the reduce phase, the intermediate values for each key are aggregated to produce the final output.
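
The classic illustration of the two phases is counting words. The sketch below is plain Python running in a single process, not Hadoop code. The names `map_fn`, `reduce_fn`, and `word_count` are hypothetical and chosen only for this example.

```python
from collections import defaultdict


def map_fn(line):
    """Map: emit a (word, 1) pair for every word in one line of input."""
    return [(word.lower(), 1) for word in line.split()]


def reduce_fn(word, counts):
    """Reduce: combine all counts emitted for one word into a total."""
    return word, sum(counts)


def word_count(lines):
    # Map phase: each line is processed independently of the others.
    intermediate = []
    for line in lines:
        intermediate.extend(map_fn(line))

    # Group values by key so each reduce call sees every count for one word.
    grouped = defaultdict(list)
    for word, count in intermediate:
        grouped[word].append(count)

    # Reduce phase: aggregate the values collected for each key.
    return dict(reduce_fn(word, counts) for word, counts in grouped.items())


print(word_count(["big data", "Big cluster"]))
# {'big': 2, 'data': 1, 'cluster': 1}
```

Since `map_fn` only looks at one line at a time, the map calls could run on different machines without coordinating, which is what lets the model scale out.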

4.2 MapReduce Workflow

The MapReduce workflow involves the following steps:

- Input Splitting: The input data is divided into smaller input splits, and each split is assigned to a mapper for processing.

- Mapping: Each mapper processes its input split and produces intermediate key-value pairs.

- Shuffling and Sorting: The intermediate key-value pairs are shuffled so that all values for the same key arrive at the same reducer, and they are sorted by key.

- Reducing: Each reducer processes its share of the sorted key-value pairs, one key at a time, and produces the final output.
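
The four steps above can be sketched as a small single-process simulation. This is an illustration under stated assumptions, not how Hadoop is implemented. The `year,temperature` records are made-up sample data, the function names are hypothetical, and the partitioning rule (`int(key) % num_reducers`) stands in for the hash-based partitioning Hadoop uses by default.

```python
from collections import defaultdict


def split_input(records, split_size):
    """Input splitting: cut the record list into fixed-size splits."""
    return [records[i:i + split_size] for i in range(0, len(records), split_size)]


def mapper(split):
    """Mapping: parse 'year,temperature' lines into (year, temperature) pairs."""
    pairs = []
    for line in split:
        year, temp = line.split(",")
        pairs.append((year, int(temp)))
    return pairs


def shuffle_and_sort(mapper_outputs, num_reducers):
    """Shuffling and sorting: route each key to one reducer, then sort by key."""
    partitions = [defaultdict(list) for _ in range(num_reducers)]
    for pairs in mapper_outputs:
        for key, value in pairs:
            # Deterministic stand-in for hashing the key.
            partitions[int(key) % num_reducers][key].append(value)
    return [sorted(p.items()) for p in partitions]


def reducer(sorted_groups):
    """Reducing: keep the maximum temperature seen for each year."""
    return [(year, max(temps)) for year, temps in sorted_groups]


def run_job(records, split_size=2, num_reducers=2):
    splits = split_input(records, split_size)
    mapper_outputs = [mapper(s) for s in splits]  # one mapper per split
    reducer_inputs = shuffle_and_sort(mapper_outputs, num_reducers)
    output = []
    for groups in reducer_inputs:                 # one reducer per partition
        output.extend(reducer(groups))
    return sorted(output)


records = ["2020,31", "2021,28", "2020,35", "2022,30", "2021,33"]
print(run_job(records))
# [('2020', 35), ('2021', 33), ('2022', 30)]
```

Note that the two readings for 2020 start in different splits and are handled by different mappers. The shuffle step is what brings them together at one reducer.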