Hadoop changed how big data is processed by making scalable, distributed storage and computation practical on ordinary hardware. This article covers what Hadoop is, its key components, its architecture, advantages, use cases, challenges, and its future.

What is Hadoop?

Hadoop is an open-source framework designed for distributed storage and processing of large datasets across clusters of commodity hardware. It is developed as an open-source project under the Apache Software Foundation and has become a prominent player in the big data ecosystem. Hadoop enables businesses to analyze vast amounts of structured and unstructured data, supporting better-informed decisions.

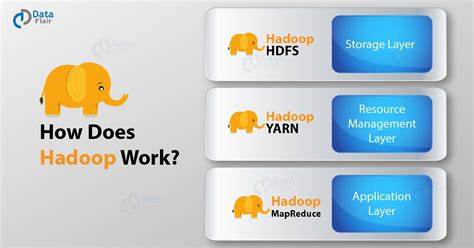

Hadoop’s Key Components

Hadoop consists of several key components that work together to enable efficient data processing. These components include:

1. Hadoop Distributed File System (HDFS)

HDFS is a distributed file system that provides high-throughput access to data across multiple nodes in a Hadoop cluster. It splits large files into fixed-size blocks (128 MB by default in current releases) and stores several copies of each block (three by default) on different nodes, so the data remains available when a node fails.
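To make the block and replica idea concrete, here is a minimal Python sketch. It is not HDFS code: the function names (`split_into_blocks`, `place_replicas`, `readable_after_failure`) and the node names are hypothetical, and the round-robin placement is a deliberate simplification of the rack-aware policy that HDFS actually uses. The 128 MB block size and replication factor of 3 reflect the HDFS defaults.

```python
BLOCK_SIZE = 128 * 1024 * 1024  # HDFS default block size (128 MB)
REPLICATION = 3                 # HDFS default replication factor


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Return the size in bytes of each block for a file of file_size bytes."""
    if file_size <= 0:
        return []
    full_blocks, remainder = divmod(file_size, block_size)
    sizes = [block_size] * full_blocks
    if remainder:
        sizes.append(remainder)  # the last block may be smaller than block_size
    return sizes


def place_replicas(num_blocks, nodes, replication=REPLICATION):
    """Assign each block to `replication` distinct nodes, round-robin.

    Assumption for illustration only: real HDFS placement is rack-aware.
    """
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    return {
        block_id: [nodes[(block_id + i) % len(nodes)] for i in range(replication)]
        for block_id in range(num_blocks)
    }


def readable_after_failure(placement, failed_nodes):
    """True if every block still has at least one replica on a healthy node."""
    return all(
        any(node not in failed_nodes for node in replicas)
        for replicas in placement.values()
    )
```

For a 300 MB file, `split_into_blocks` yields two full 128 MB blocks and one 44 MB block. With four nodes and three replicas per block, the file stays readable after any two nodes fail, which is the fault tolerance that replication provides.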

2. MapReduce

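MapReduce is a programming model and processing engine that allows for parallel processing of large datasets. It divides the processing task into two stages: the map stage, where input records are filtered and transformed into intermediate key-value pairs, and the reduce stage, where the values that share a key are aggregated into the final results. Between the two stages, the framework groups the intermediate pairs by key.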
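The classic illustration of this model is a word count. The sketch below imitates the map, group-by-key, and reduce steps in a single Python process. It does not use the Hadoop API, and the function names are hypothetical. On a real cluster the map and reduce functions would run as many parallel tasks on different nodes.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs):
    """Group all emitted values by key, as the framework does between stages."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: aggregate all values that share one key."""
    return key, sum(values)


def word_count(lines):
    """Run map, shuffle, and reduce in sequence over the input lines."""
    pairs = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle(pairs)
    return dict(reduce_phase(key, values) for key, values in grouped.items())
```

Calling `word_count(["big data", "Big cluster"])` counts `big` twice and the other words once. Because each map call depends only on its own line and each reduce call only on its own key, both stages can be spread across machines without coordination inside a stage.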

Hadoop Cluster Architecture

A Hadoop cluster comprises multiple nodes working together to store and process data. For storage, the cluster typically consists of a master node called the NameNode and multiple worker nodes called DataNodes. The DataNodes hold the actual data blocks, while the NameNode maintains the file system metadata: the directory tree and the record of which DataNodes hold each file's blocks.
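The division of labor between the two roles can be sketched as a toy model. Everything here is hypothetical and heavily simplified: the class and function names are invented for illustration, blocks are only 4 bytes, and there is no replication or networking. The point is only that the master stores metadata while the workers store bytes.

```python
class DataNode:
    """Worker: stores block contents keyed by block ID."""

    def __init__(self, name):
        self.name = name
        self.blocks = {}

    def write(self, block_id, data):
        self.blocks[block_id] = data

    def read(self, block_id):
        return self.blocks[block_id]


class NameNode:
    """Master: stores only metadata, never file contents."""

    def __init__(self):
        self.file_blocks = {}      # path -> ordered list of block IDs
        self.block_locations = {}  # block ID -> name of the DataNode holding it

    def add_block(self, path, block_id, datanode_name):
        self.file_blocks.setdefault(path, []).append(block_id)
        self.block_locations[block_id] = datanode_name

    def locate(self, path):
        """Return (block ID, DataNode name) pairs in file order."""
        return [(b, self.block_locations[b]) for b in self.file_blocks.get(path, [])]


def write_file(namenode, datanodes, path, data, block_size=4):
    """Split data into blocks, store them on DataNodes, record the metadata."""
    names = sorted(datanodes)
    for index, start in enumerate(range(0, len(data), block_size)):
        block_id = f"{path}#{index}"
        target = names[index % len(names)]  # toy placement, one copy per block
        datanodes[target].write(block_id, data[start:start + block_size])
        namenode.add_block(path, block_id, target)


def read_file(namenode, datanodes, path):
    """Ask the NameNode where the blocks are, then fetch them from DataNodes."""
    return b"".join(
        datanodes[node_name].read(block_id)
        for block_id, node_name in namenode.locate(path)
    )
```

A client that writes `b"hello hadoop"` to two DataNodes and reads it back gets the same bytes, even though the NameNode never saw the contents. This is also why the NameNode matters so much to the cluster: without its metadata, the blocks on the DataNodes cannot be reassembled into files.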

Advantages of Hadoop

Hadoop offers several advantages that have contributed to its widespread adoption:

- Scalability: Hadoop allows organizations to scale their storage and processing capacity by adding nodes to the cluster. It can handle petabytes of data across thousands of nodes.

- Cost-effectiveness: Hadoop runs on commodity hardware, making it a cost-effective solution compared to traditional data processing systems.

- Flexibility: Hadoop supports various data types, including structured, semi-structured, and unstructured data. It can process data from diverse sources such as social media, IoT devices, and log files.

Use Cases of Hadoop

Hadoop finds applications in numerous industries and use cases:

- Big Data Analytics: Hadoop enables organizations to perform advanced analytics on large datasets to gain insights and make data-driven decisions.

- Recommendation Systems: Hadoop powers recommendation engines used by e-commerce and streaming platforms to provide personalized suggestions to users.

- Fraud Detection: Hadoop’s ability to process massive amounts of data in parallel makes it suitable for detecting fraudulent activities by analyzing patterns and anomalies across large transaction histories.

Challenges in Implementing Hadoop

Implementing Hadoop can present some challenges:

- Data Integration: Organizations often face difficulties integrating data from various sources into Hadoop, as the data may be in different formats and structures.

- Skill Gap: Hadoop requires specialized skills and knowledge to set up, configure, and optimize the cluster, which can be a challenge for organizations lacking the necessary expertise.

Security Considerations

Security is a crucial aspect of any Hadoop implementation. Organizations must secure their Hadoop clusters by authenticating users and services, protecting data privacy, and implementing access controls to prevent unauthorized access and data breaches.

Future of Hadoop

Despite the rise of alternative technologies such as Apache Spark and cloud-based solutions, Hadoop continues to play a vital role in the big data landscape. It is evolving to meet the changing needs of organizations, with improvements in performance, security, and integration with other tools and frameworks.

Conclusion

Hadoop has emerged as a powerful framework for processing big data, enabling organizations to harness the potential of their data assets. With its distributed file system, scalable architecture, and parallel processing capabilities, Hadoop has become a cornerstone of modern data processing and analytics. As technology advances, Hadoop is expected to adapt and remain relevant in the ever-growing field of big data.