Big data has become an integral part of modern business operations, and organizations are constantly seeking ways to harness its power to gain valuable insights. One of the most popular cloud platforms for managing and analyzing big data is Amazon Web Services (AWS). In this article, we will explore the world of AWS Big Data, its importance, and how you can get started with learning it.

The Importance of Learning AWS Big Data

In today’s data-driven world, companies generate and collect vast amounts of data. However, making sense of this data requires powerful tools and infrastructure. "AWS Big Data" is not a single product; it refers to the suite of AWS services that let organizations store, process, analyze, and visualize massive datasets efficiently. By learning these services, you can build the skills needed to support data-driven decision-making and help your organization gain a competitive edge.

Getting Started with AWS Big Data

Before diving into AWS Big Data, you need to set up an AWS account. Visit the AWS website (https://aws.amazon.com/) and follow the instructions to create your account. Once your account is set up, you can access the AWS Management Console and start exploring the various services available for big data processing.

Understanding the Basics of Big Data

To make the most of AWS Big Data, it’s essential to understand the fundamentals of big data. Big data is commonly characterized by the 5 Vs: Volume, Variety, Velocity, Veracity, and Value. Volume refers to the massive amount of data generated; Variety relates to the diverse types and formats of data; Velocity indicates the speed at which data is generated and processed; Veracity concerns the reliability and accuracy of the data; and Value represents the insights and business value extracted from the data.

AWS Big Data Services and Tools

AWS offers a wide range of services and tools specifically designed for big data processing. Let’s explore some of the key services:

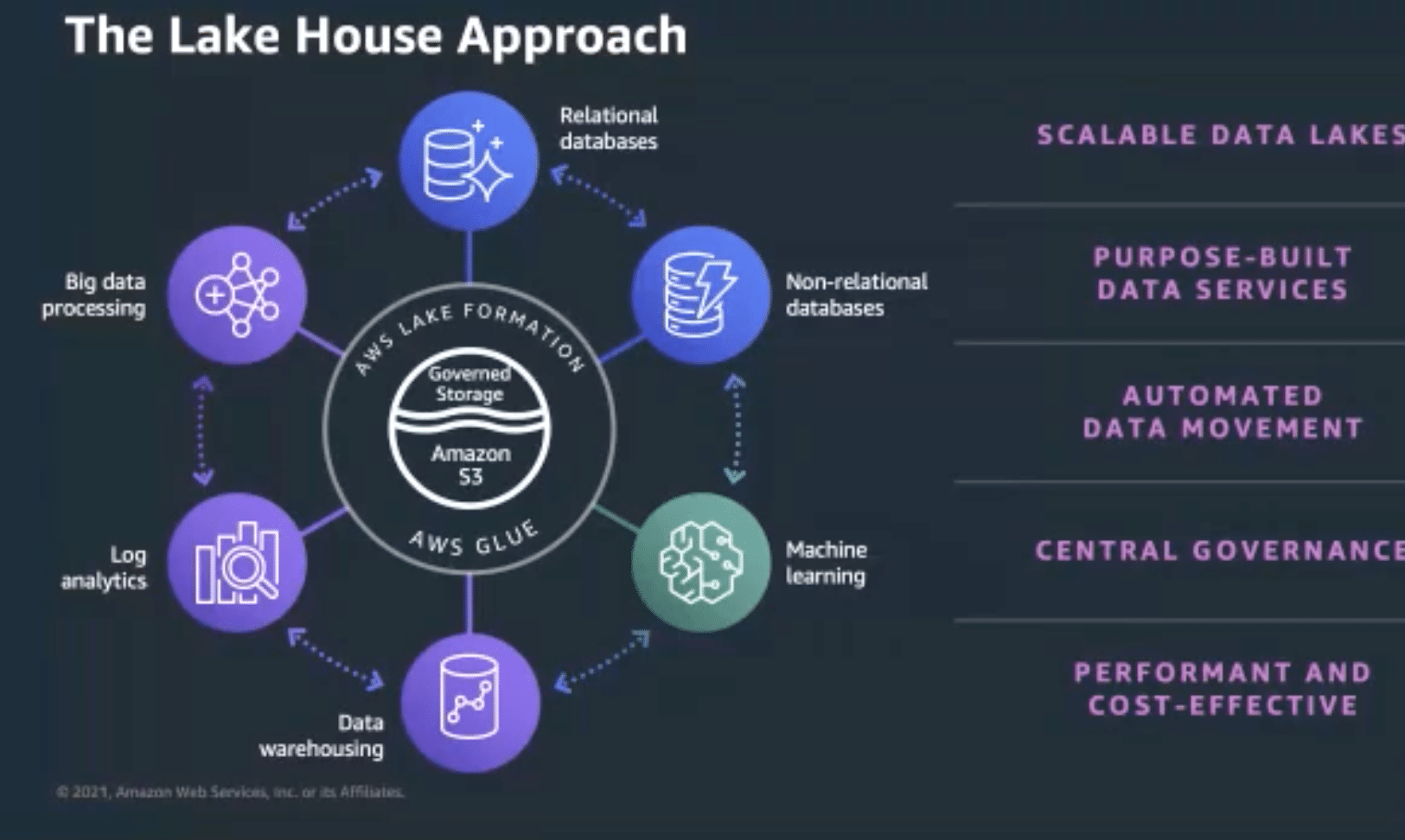

- Amazon S3: Amazon Simple Storage Service (S3) is a scalable and durable object storage service that allows you to store and retrieve any amount of data. It is commonly used for data storage in big data architectures.

- Amazon Redshift: Amazon Redshift is a fully managed data warehousing service that enables you to analyze large datasets with high performance and scalability. It is ideal for running complex analytical queries on structured data.

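- Amazon Athena: Amazon Athena is a serverless, interactive query service that allows you to analyze data directly in Amazon S3 using standard SQL queries. Because it queries data in place, you do not need to load the data into a separate database first, although ETL (Extract, Transform, Load) steps such as converting files to a columnar format can still improve performance and cost.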

- Amazon EMR: Amazon EMR (formerly Amazon Elastic MapReduce) is a managed big data processing service that simplifies the deployment and management of frameworks such as Apache Hadoop and Apache Spark. It provides a scalable and cost-effective solution for processing large datasets.
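
To make the Athena description above more concrete, here is a minimal Python sketch of how a query request might be assembled for the boto3 Athena client. The database, table, and bucket names are hypothetical placeholders, and `run_query` assumes boto3 is installed and AWS credentials are configured; treat it as an illustration rather than a production setup.

```python
from typing import Any, Dict


def build_athena_request(sql: str, database: str, output_location: str) -> Dict[str, Any]:
    """Build keyword arguments for the Athena StartQueryExecution call."""
    if not output_location.startswith("s3://"):
        raise ValueError("Athena writes query results to an S3 location")
    return {
        "QueryString": sql,
        "QueryExecutionContext": {"Database": database},
        "ResultConfiguration": {"OutputLocation": output_location},
    }


def run_query(request: Dict[str, Any]) -> str:
    """Submit the query and return its execution ID (needs boto3 and credentials)."""
    import boto3  # imported lazily so the builder above works without it

    athena = boto3.client("athena")
    response = athena.start_query_execution(**request)
    return response["QueryExecutionId"]


# Hypothetical database, table, and bucket names, used only for illustration.
request = build_athena_request(
    sql="SELECT status, COUNT(*) AS orders FROM orders GROUP BY status",
    database="sales_demo",
    output_location="s3://example-query-results/athena/",
)
```

Calling `run_query(request)` would start the query asynchronously; results land in the S3 output location once the query finishes.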

Building a Big Data Pipeline on AWS

To effectively process and analyze big data on AWS, it’s important to establish a well-defined data pipeline. Here are the key components of a typical big data pipeline on AWS:

- Data Ingestion: Ingest data from various sources into the AWS ecosystem. This can include data from databases, streaming platforms, or external sources.

- Data Storage and Management: Store and organize the ingested data in a suitable AWS storage service like Amazon S3 or Amazon Redshift.

- Data Processing and Analysis: Use services like Amazon EMR or Amazon Athena to process and analyze the data. This step may involve tasks such as data transformation, machine learning, or running complex analytical queries.

- Data Visualization and Reporting: Visualize the analyzed data using tools like Amazon QuickSight or integrate AWS services with third-party visualization tools for generating meaningful insights and reports.
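
The four stages above can be sketched locally before any AWS service is involved. The example below is a simplified stand-in, not an AWS implementation: an in-memory dictionary plays the role of an S3 bucket, the sample orders are made up, and the `region=` key layout is just one assumed way to organize objects by prefix.

```python
import csv
import io
from collections import defaultdict
from typing import Dict, List

# Made-up sample data standing in for an upstream source system.
RAW_CSV = """order_id,region,amount
1,eu,20.50
2,us,10.00
3,eu,4.50
"""


def ingest(raw: str) -> List[Dict[str, str]]:
    """Ingestion: parse records arriving from a source."""
    return list(csv.DictReader(io.StringIO(raw)))


def store(records: List[Dict[str, str]], data_lake: Dict[str, list]) -> None:
    """Storage: group records under prefix-style keys, as one might in S3."""
    for record in records:
        key = f"orders/region={record['region']}/part-0000.csv"
        data_lake[key].append(record)


def process(data_lake: Dict[str, list]) -> Dict[str, float]:
    """Processing: total order amount per region."""
    totals: Dict[str, float] = defaultdict(float)
    for records in data_lake.values():
        for record in records:
            totals[record["region"]] += float(record["amount"])
    return dict(totals)


def report(totals: Dict[str, float]) -> List[str]:
    """Reporting: format the totals for display."""
    return [f"{region}: {total:.2f}" for region, total in sorted(totals.items())]


data_lake: Dict[str, list] = defaultdict(list)
store(ingest(RAW_CSV), data_lake)
totals = process(data_lake)
lines = report(totals)
print("\n".join(lines))
```

In a real pipeline, each function would be replaced by the corresponding service: an ingestion tool writing to Amazon S3, Amazon EMR or Amazon Athena for processing, and Amazon QuickSight for reporting.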

Best Practices for AWS Big Data

When working with AWS Big Data, it’s important to follow best practices to ensure optimal performance, security, and cost efficiency. Here are some key considerations:

- Data Security and Compliance: Put appropriate safeguards in place to protect sensitive data. Use encryption, access controls, and data anonymization techniques as needed. Ensure compliance with relevant data protection regulations.

- Scalability and Performance Optimization: Design your big data solutions to scale horizontally and vertically as demand changes. Optimize data processing workflows to minimize latency and improve overall performance.

- Cost Optimization: Choose the right AWS services based on your workload requirements. Leverage cost optimization techniques like Reserved Instances, Spot Instances, and efficient data storage strategies to reduce expenses.
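
As one example of an efficient data storage strategy, an S3 lifecycle rule can move older objects to cheaper storage classes automatically. The sketch below builds such a rule as a plain Python dictionary in the shape expected by boto3's `put_bucket_lifecycle_configuration`. The prefix, bucket name, and day thresholds are assumptions chosen for illustration, and `apply_lifecycle` requires boto3 and AWS credentials.

```python
from typing import Any, Dict


def build_lifecycle_configuration(
    prefix: str, ia_after_days: int, glacier_after_days: int
) -> Dict[str, Any]:
    """Build a lifecycle configuration that tiers objects down as they age."""
    if not 0 < ia_after_days < glacier_after_days:
        raise ValueError("expected 0 < ia_after_days < glacier_after_days")
    rule_id = "tier-down-" + prefix.strip("/").replace("/", "-")
    return {
        "Rules": [
            {
                "ID": rule_id,
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
                    {"Days": glacier_after_days, "StorageClass": "GLACIER"},
                ],
            }
        ]
    }


def apply_lifecycle(bucket: str, config: Dict[str, Any]) -> None:
    """Attach the configuration to a bucket (needs boto3 and credentials)."""
    import boto3  # imported lazily so the builder above works without it

    s3 = boto3.client("s3")
    s3.put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=config
    )


# Hypothetical prefix and thresholds, used only for illustration.
config = build_lifecycle_configuration("raw/logs/", 30, 90)
```

Whether these thresholds save money depends on how often the data is read, so it is worth checking access patterns before applying a rule like this.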

Real-World Use Cases of AWS Big Data

AWS Big Data has been successfully adopted by organizations across various industries. Here are some real-world use cases:

- E-commerce Analytics: Retail companies leverage AWS Big Data services to analyze customer behavior, improve personalization, and enhance sales forecasting.

- IoT Data Processing: Internet of Things (IoT) devices generate a massive amount of data. AWS Big Data services provide the infrastructure and tools to process and derive insights from IoT data.

- Fraud Detection: Financial institutions use AWS Big Data services to detect fraudulent activities by analyzing large volumes of transactional data in real time.

Conclusion

Learning AWS Big Data opens up a world of possibilities for professionals and organizations seeking to harness the power of big data. By understanding the fundamentals, exploring the AWS Big Data services, and following best practices, you can effectively store, process, analyze, and visualize massive datasets to support data-driven decision-making. Start your journey into AWS Big Data today and unlock the potential of your data.